GPT-4: OpenAI’s Latest Breakthrough in Deep Learning

OpenAI has made a significant breakthrough in deep learning with the introduction of GPT-4, a large multimodal model that accepts both image and text inputs. This latest milestone in OpenAI’s efforts to scale up deep learning offers improved factuality, steerability, and adherence to guardrails compared to GPT-3.5. Let’s take a closer look at what we can expect from this latest model.


What we can expect – key takeaways

- New model exhibits human-level performance on various professional and academic benchmarks

- Improved factuality, steerability, and adherence to guardrails compared to GPT-3.5

- Built on a new deep learning stack and a supercomputer co-designed with Azure

- Text input capability released via ChatGPT and API (with waitlist)

- Image input capability being prepared for wider availability through a partnership

- Open-sourcing OpenAI Evals for automated evaluation of AI model performance

- GPT-4 is more reliable, creative, and capable of handling nuanced instructions compared to GPT-3.5

- Outperforms existing large language models on traditional benchmarks

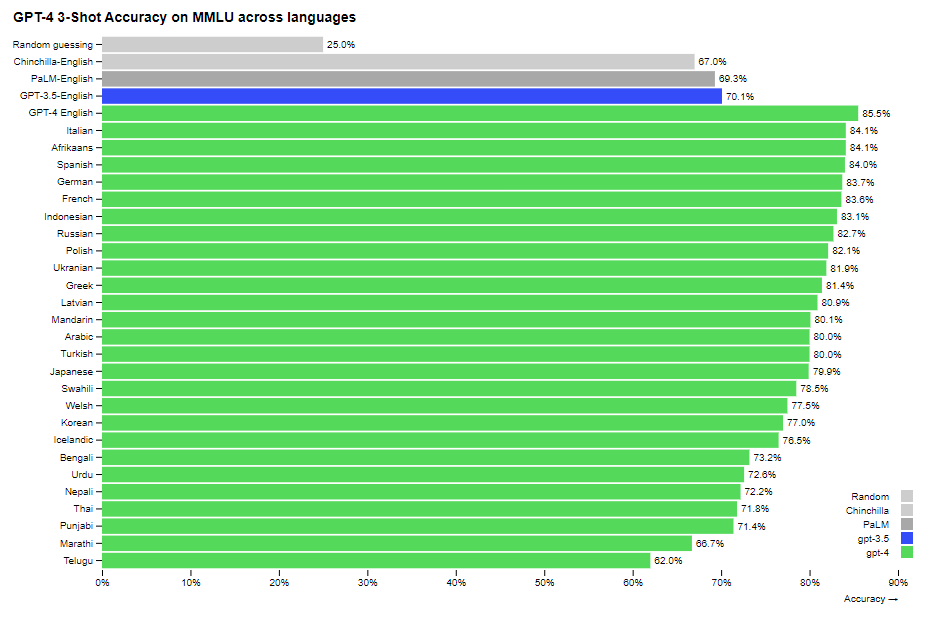

- Shows improved performance across multiple languages, including low-resource languages

- Visual inputs (image-based) still in research preview and not publicly available


Improved Capabilities

GPT-4 exhibits human-level performance on various professional and academic benchmarks and outperforms existing large language models on traditional benchmarks. Its improved capabilities stem from a new deep learning stack and a supercomputer co-designed with Microsoft Azure. Text input is already available via ChatGPT and the API (with a waitlist), while image input is being prepared for wider availability through a partnership. OpenAI has also open-sourced OpenAI Evals, a framework for automated evaluation of AI model performance.
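The core idea behind automated evaluation is simple: run a model over a fixed set of prompts, grade each answer with a rule rather than a human, and report a score. The sketch below illustrates that idea only. It is not the OpenAI Evals API, which has its own registry and sample formats. `Sample`, `run_exact_match_eval` and `toy_model` are hypothetical names, and `toy_model` stands in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Sample:
    """One eval case: a prompt and the answer we consider correct."""
    prompt: str
    ideal: str


def run_exact_match_eval(model: Callable[[str], str], samples: list[Sample]) -> float:
    """Return the fraction of samples where the model's answer matches `ideal`.

    Answers are compared after stripping surrounding whitespace and lowercasing,
    a deliberately simple grading rule chosen for illustration.
    """
    if not samples:
        raise ValueError("need at least one sample")
    correct = 0
    for sample in samples:
        answer = model(sample.prompt)
        if answer.strip().lower() == sample.ideal.strip().lower():
            correct += 1
    return correct / len(samples)


# Hypothetical stand-in for a real model call; it only "knows" two answers.
def toy_model(prompt: str) -> str:
    canned = {
        "Capital of Iceland?": "Reykjavik",
        "2 + 2 = ?": "4",
    }
    return canned.get(prompt, "I don't know")


samples = [
    Sample("Capital of Iceland?", "reykjavik"),
    Sample("2 + 2 = ?", "4"),
    Sample("Largest planet?", "Jupiter"),
]

print(f"accuracy = {run_exact_match_eval(toy_model, samples):.2f}")  # accuracy = 0.67
```

Exact match is the crudest possible grader. Real evaluation suites also use fuzzy matching or model-graded answers, but the loop of prompt, answer, automatic grade and aggregate score stays the same.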

GPT-4 is more reliable, more creative, and better at handling nuanced instructions than GPT-3.5. It also performs better across multiple languages, including low-resource languages. OpenAI says it is already using GPT-4 internally for functions such as support, sales, content moderation, and programming. However, image-based visual inputs are still in research preview and not publicly available.

Focus on Safety and Usefulness

OpenAI claims that GPT-4's focus on safety and usefulness sets it apart from its predecessors, alongside enhanced creativity, collaboration, problem-solving, and general knowledge. The improvements in safety and alignment are the result of six months of dedicated work. According to OpenAI's internal evaluations, GPT-4 is 82% less likely than GPT-3.5 to respond to requests for disallowed content and 40% more likely to produce factual responses.
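Figures like "82% less likely" and "40% more likely" are relative changes, not percentage-point changes, so their practical meaning depends on the GPT-3.5 baseline, which these headline numbers do not state. The sketch below makes the arithmetic concrete. The baseline rates are hypothetical values chosen for illustration, not figures published by OpenAI, and `apply_relative_change` is a hypothetical helper.

```python
def apply_relative_change(baseline_rate: float, relative_change: float) -> float:
    """Scale a baseline rate by a relative change (-0.82 means '82% less')."""
    return baseline_rate * (1.0 + relative_change)


# Hypothetical GPT-3.5 baselines, NOT figures published by OpenAI.
baseline_disallowed = 0.10  # assume it answers 10% of disallowed requests
baseline_factual = 0.50     # assume 50% of its answers are judged factual

gpt4_disallowed = apply_relative_change(baseline_disallowed, -0.82)
gpt4_factual = apply_relative_change(baseline_factual, +0.40)

print(f"disallowed: {gpt4_disallowed:.3f}")  # disallowed: 0.018
print(f"factual: {gpt4_factual:.2f}")        # factual: 0.70
```

Under these assumed baselines, "82% less likely" is a drop from 10% to 1.8%, which is 8.2 percentage points rather than 82. A relative increase also cannot be applied to every baseline: +40% on a rate above about 71% would exceed 100%. This is one reason such figures are hard to interpret without the underlying evaluation details.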

Training with Human Feedback

OpenAI explains that one of the key features of GPT-4 is its training with human feedback, allowing for continuous improvement based on real-world use. OpenAI has also collaborated with organizations like Duolingo, Be My Eyes, Stripe, Morgan Stanley, Khan Academy, and the Government of Iceland to integrate the model into innovative products.

Collaboration with Organizations

GPT-4 has been integrated into innovative products by various organizations and is available through ChatGPT Plus and an API for developers. Some notable collaborations include Duolingo, which is using GPT-4 to develop language learning tools, and Be My Eyes, which is using the model to help visually impaired individuals. Other organizations using GPT-4 include Stripe, Morgan Stanley, Khan Academy, and the Government of Iceland.
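For developers, "available through an API" means sending chat-formatted requests to OpenAI's Chat Completions endpoint. OpenAI's launch materials also described steering GPT-4's style and task through a "system" message, which is where the steerability mentioned earlier comes in. The minimal sketch below only builds such a request with the standard library and never sends it, so it runs without network access or an account. The endpoint and JSON shape follow the Chat Completions format, and `"gpt-4"` was the launch model name. The API key, prompts and `build_chat_request` helper are hypothetical placeholders, and the API may have changed since launch, so check OpenAI's current documentation before relying on it.

```python
import json
import urllib.request

# Chat Completions endpoint; the request below is built but never sent.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, system_prompt: str, user_prompt: str) -> urllib.request.Request:
    """Assemble a GPT-4 chat request; the system message steers style and task."""
    body = {
        "model": "gpt-4",
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request(
    api_key="sk-placeholder",  # hypothetical placeholder, not a real key
    system_prompt="You are a patient Socratic tutor. Never give the answer outright.",
    user_prompt="How do I solve 3x + 5 = 14?",
)
print(req.get_method(), req.full_url)  # POST https://api.openai.com/v1/chat/completions
# Actually sending it would require a valid key: urllib.request.urlopen(req)
```

Changing only the system message, for example to "Answer tersely, as a customer-support agent", changes the requested behavior without touching the user's prompt. That separation is what makes the model steerable from the developer's side.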

Addressing Known Limitations

Despite these advances, OpenAI acknowledges that GPT-4 still has known limitations, such as social biases and hallucinations. The company says it is continuing to work on these issues, including through safety research assisted by GPT-4 itself.

Implications for AI

GPT-4 was trained on Microsoft Azure AI supercomputers and represents a significant advance in AI capabilities, with potential applications across many domains. As Greg Brockman, President and co-founder of OpenAI, notes, “GPT-4 is the next step in our long-term vision for AI, building a system that can help us solve a wide range of problems across a diverse set of industries and use cases.”

While GPT-4's advances in AI capabilities are impressive, some experts remain sceptical about the safety and ethical implications of this technology. Despite OpenAI's focus on safety and usefulness, questions remain about whether companies will prioritise safety in their use of GPT-4, or whether the emphasis on safety is merely the AI equivalent of “greenwashing.” Furthermore, GPT-4's potential applications across many domains raise concerns about the displacement of human workers and the potential for bias and discrimination. As with any new technology, it is important to approach GPT-4 with a critical eye and to consider the consequences of its widespread use.